[Book Review] Fundamentals of Deep Learning 2nd Edition

- An honest review of Fundamentals of Deep Learning (2nd ed.), focusing on its depth and hands-on difficulty.

- Use it to decide quickly whether this book fits your current level or whether a different path is better for now.

Before We Begin

If you're interested in AI and mainly want to know how to use Stable Diffusion or LLMs such as OpenAI's models, or what's possible with them, I recommend studying prompt engineering rather than deep learning.

However, if you want to understand how they work at a fundamental level, this book could be a good choice. Be aware that it is fairly demanding.

It's better to read this after building foundational knowledge about deep learning. I've added recommended books at the bottom of the review.

Introduction

'Fundamentals of Deep Learning (2nd Edition)' focuses on the basics and essence of deep learning, giving readers broad theoretical and practical knowledge. It first covers the mathematical background needed to understand deep learning, namely linear algebra and probability. It then details the basic principles of neural networks, the structure of feedforward neural networks, how to implement them with PyTorch practice code, and how to train and evaluate them on real datasets. Chapters on gradient descent and optimization, convolutional neural networks and image processing, and variational autoencoders build practical implementation skills while going deep into specific application areas and neural network architectures.

The latter part provides theoretical and practical knowledge about sequence analysis models, generative models, and interpretability methodologies, explaining how to apply deep learning to each field by reflecting the latest trends. This book spans from deep learning basics to advanced content and is a perfect guide to mastering deep learning technology in one book, living up to its name as a 'fundamentals' text.

What's New in the 2nd Edition

Linear algebra, probability, generative models, and interpretability methodologies have been added. The 1st edition used TensorFlow, but the 2nd edition uses PyTorch.

Summary

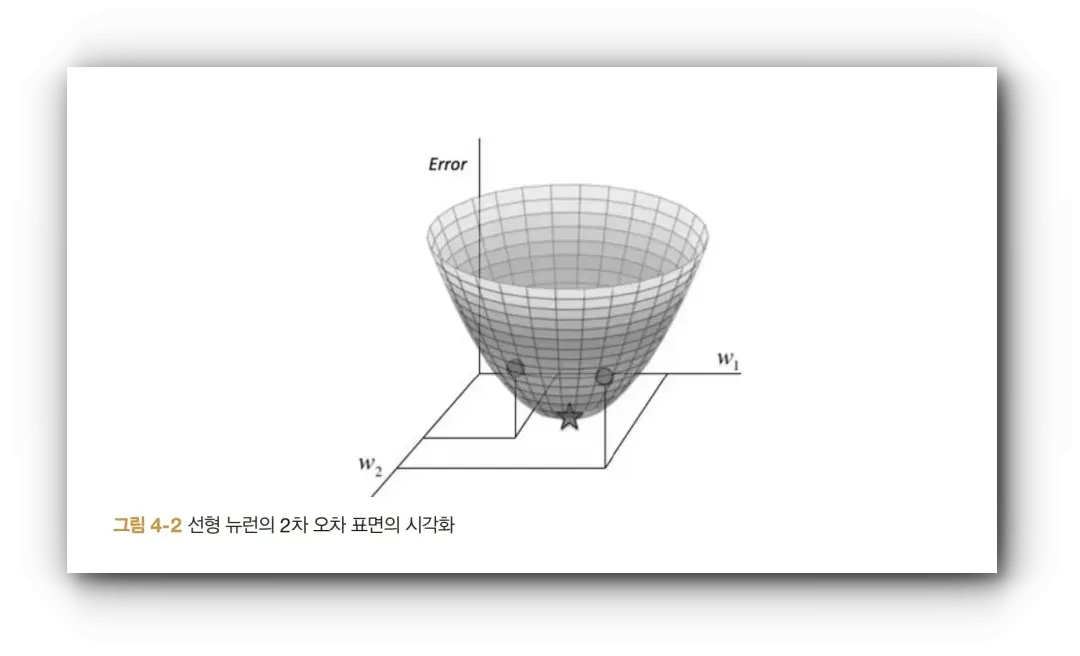

Chapters 1-2 cover the basics of linear algebra and probability. These chapters briefly explain the linear algebra and probability you need to know before studying machine learning.

Linear algebra covers matrix operations, vector operations, matrix-vector multiplication, spaces, and eigenvectors and eigenvalues. Probability basics cover probability distributions, conditional probability, random variables, expectation and variance.

Chapters 3-5 cover neural networks. They introduce basic machine learning concepts, explain why deep learning is used to overcome the limitations of traditional computer programs, and present artificial neural networks, which are loosely modeled on neurons in the human brain.

Starting in Chapter 5, gradient descent, the backpropagation algorithm, and test sets, validation sets, and overfitting are introduced, and artificial neural networks are implemented in PyTorch.

(Using MNIST training dataset)
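To make the gradient descent and backpropagation idea concrete, here is a minimal sketch in plain Python rather than PyTorch. It is not taken from the book: the function names, the toy logical-OR dataset (a stand-in for MNIST), and the hyperparameters are all my own assumptions.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=0.5, epochs=2000):
    """Fit one sigmoid neuron with batch gradient descent on cross-entropy loss."""
    w1, w2, b = 0.0, 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        g1 = g2 = gb = 0.0
        for (x1, x2), y in data:
            p = sigmoid(w1 * x1 + w2 * x2 + b)  # forward pass
            err = p - y                         # dLoss/dz for sigmoid + cross-entropy
            g1 += err * x1                      # chain rule: dz/dw1 = x1
            g2 += err * x2                      # chain rule: dz/dw2 = x2
            gb += err                           # chain rule: dz/db = 1
        # Step against the average gradient
        w1 -= lr * g1 / n
        w2 -= lr * g2 / n
        b -= lr * gb / n
    return w1, w2, b

# Toy data: logical OR (hypothetical stand-in for a real dataset)
OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w1, w2, b = train_neuron(OR_DATA)
predictions = [round(sigmoid(w1 * x1 + w2 * x2 + b)) for (x1, x2), _ in OR_DATA]
```

A real network stacks many such units, and backpropagation applies the same chain rule layer by layer. PyTorch's autograd computes those gradients automatically.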

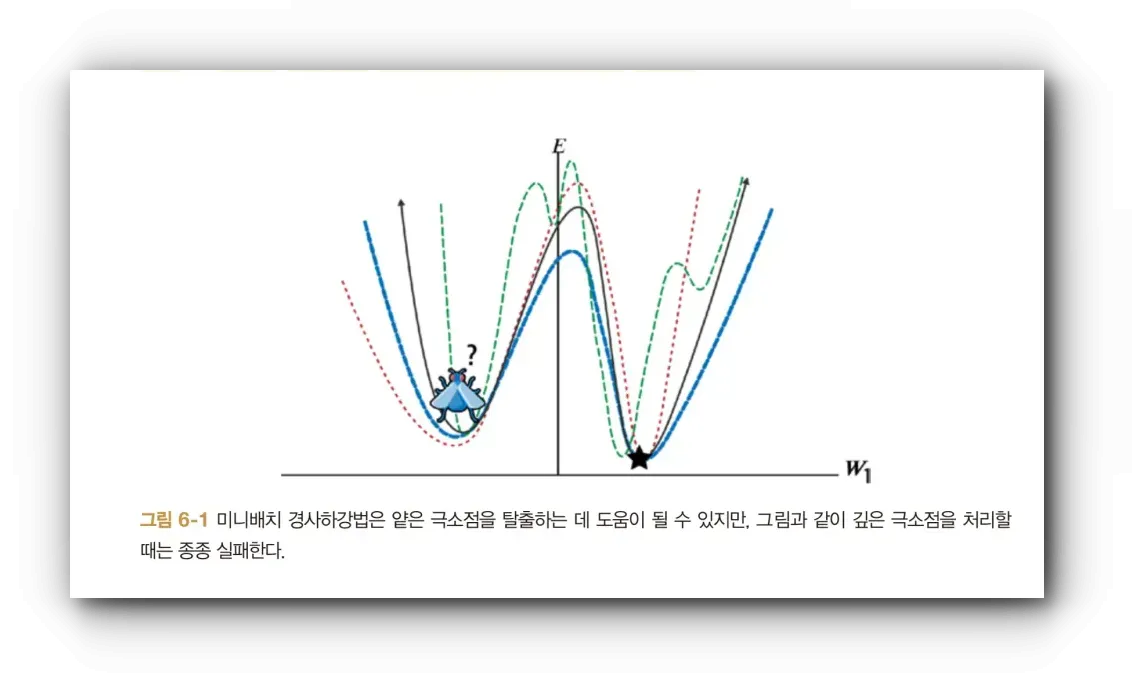

Chapter 6 covers gradient descent in more depth.

This chapter explains various optimization techniques for reaching a good minimum of the loss efficiently, for example mini-batch gradient descent, mini-batch gradient descent with momentum, RMSProp, RMSProp with momentum, and Adam.
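The difference between these optimizers is easier to see in code. Below is a rough sketch of the update rules for plain gradient descent, momentum, and Adam on a one-dimensional toy loss. The loss function, function names, and hyperparameter values are illustrative assumptions of mine, not the book's code.

```python
import math

def grad(x):
    """Gradient of the toy loss f(x) = (x - 3)^2, whose minimum is at x = 3."""
    return 2.0 * (x - 3.0)

def gradient_descent(x, lr=0.1, steps=100):
    for _ in range(steps):
        x -= lr * grad(x)
    return x

def momentum(x, lr=0.1, beta=0.9, steps=300):
    v = 0.0
    for _ in range(steps):
        v = beta * v + grad(x)  # velocity accumulates past gradients
        x -= lr * v
    return x

def adam(x, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g      # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g  # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

results = {
    "gd": gradient_descent(0.0),
    "momentum": momentum(0.0),
    "adam": adam(0.0),
}
```

All three end up close to x = 3 on this easy problem. The differences only matter on the uneven, high-dimensional loss surfaces the chapter discusses.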

Chapter 7: Convolutional Neural Networks

Explains the limitations of traditional image analysis methods and introduces the convolutional neural networks in use today. Convolutional neural networks are mainly used for image analysis, speech recognition, and object recognition.

In the 2012 ImageNet competition, a convolutional neural network architecture built on just a few months of research achieved an error rate of about 16%, compared with the 2011 winner's 25.7%, which was a breakthrough.

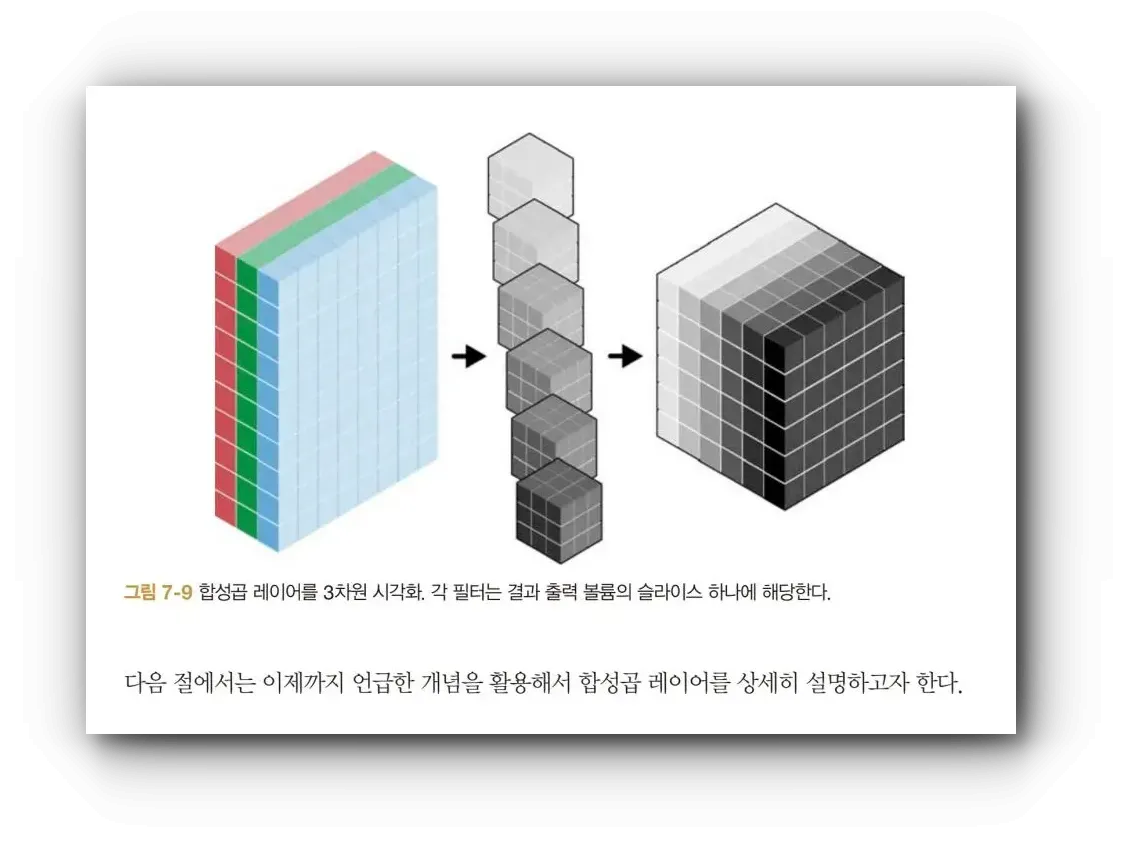

Basic deep neural networks scale poorly as images get larger and tend to overfit. Convolutional neural networks, inspired by how human vision works, instead arrange neurons in three dimensions, so each layer has a volume (width, height, and depth).
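A quick back-of-the-envelope calculation shows why the fully connected approach scales poorly. The layer sizes below are numbers I picked for illustration, not figures from the book.

```python
def dense_params(height, width, channels, hidden_units):
    """Weights + biases of one fully connected layer fed a flattened image."""
    return height * width * channels * hidden_units + hidden_units

def conv_params(kernel_size, in_channels, out_channels):
    """Weights + biases of one convolutional layer; independent of image size."""
    return kernel_size * kernel_size * in_channels * out_channels + out_channels

small = dense_params(28, 28, 1, 100)    # MNIST-sized grayscale image
large = dense_params(200, 200, 3, 100)  # modest color image
conv = conv_params(5, 3, 100)           # 100 filters of size 5x5 over 3 channels

print(small)  # 78500
print(large)  # 12000100
print(conv)   # 7600
```

The dense layer grows with every extra pixel, while the convolutional layer reuses the same small filters across the whole image.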

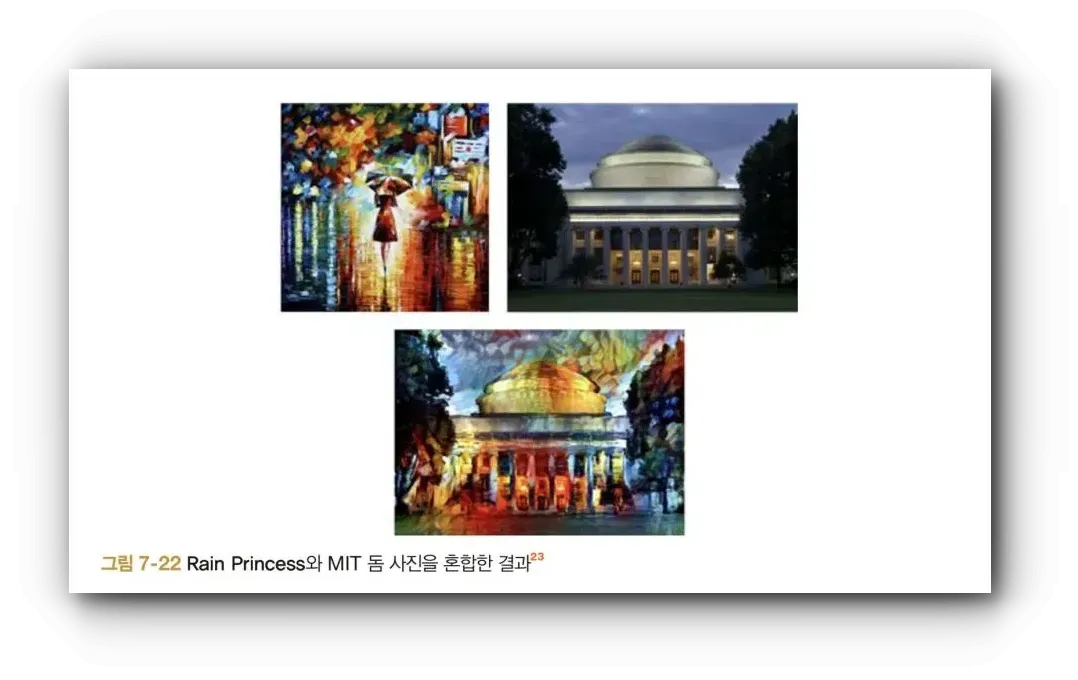

The last section is particularly interesting: it shows how neural style algorithms use convolutional filters to render photos in the style of famous artists.

After extracting stylistic characteristics from the left photo, they're applied to a regular portrait to generate new images like the one below.

Chapter 8: Embeddings and Representation Learning

Explains methods for generating embeddings. I've frequently used LLMs and LangChain to embed data as vectors, but I didn't know much about how embeddings are actually generated, so this was interesting.

Chapter 10: Generative Models

Previous chapters discussed discriminative models, and this chapter covers generative models.

A GAN (Generative Adversarial Network) is a model in which two neural networks, a Generator and a Discriminator, compete and learn from each other. The Generator tries to create fake data indistinguishable from real data, while the Discriminator tries to determine whether the data it receives is real or fake. Through this process, the Generator gradually creates more realistic data.
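The competition between the two networks comes down to two opposing loss functions. This is a minimal sketch of my own, assuming the Discriminator outputs a probability that its input is real. It uses the common non-saturating Generator loss, which may differ from the exact formulation in the book.

```python
import math

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: D wants d_real -> 1 and d_fake -> 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake):
    """Non-saturating generator loss: G wants D to output 1 on fakes."""
    return -math.log(d_fake)

# d_real / d_fake are hypothetical Discriminator outputs in (0, 1)
confident_d = discriminator_loss(d_real=0.9, d_fake=0.1)
confused_d = discriminator_loss(d_real=0.5, d_fake=0.5)
fooled = generator_loss(d_fake=0.9)
caught = generator_loss(d_fake=0.1)
```

A Discriminator that separates real from fake well gets a low loss, and the Generator's loss drops only when its fakes are scored as real, which is the tug-of-war described above.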

A VAE (Variational Autoencoder) is a model that learns a latent space that represents the input data well. It aims for efficient compression and generation of data, with a structure that maps input data to latent variables and then reconstructs the original data from them. Because it models the probabilistic characteristics of the data, a VAE can generate new data.
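Two pieces make the "probabilistic" part work in practice: sampling a latent vector in a differentiable way (the reparameterization trick) and a KL term that keeps the latent distribution close to a standard normal. The sketch below is my own illustration, assuming a diagonal Gaussian encoder that outputs a mean and log-variance per latent dimension. The function names are hypothetical.

```python
import math
import random

def reparameterize(mu, log_var, rng=random):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1), per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]

def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, 1) ), summed over latent dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))

# Hypothetical encoder outputs for a 2-dimensional latent space
mu, log_var = [1.0, 0.0], [0.0, 0.0]
z = reparameterize(mu, log_var, rng=random.Random(0))
kl = kl_to_standard_normal(mu, log_var)
```

The training loss is typically the reconstruction error plus this KL term. New data is generated by sampling z from a standard normal and running only the decoder.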

Chapter 13: Reinforcement Learning

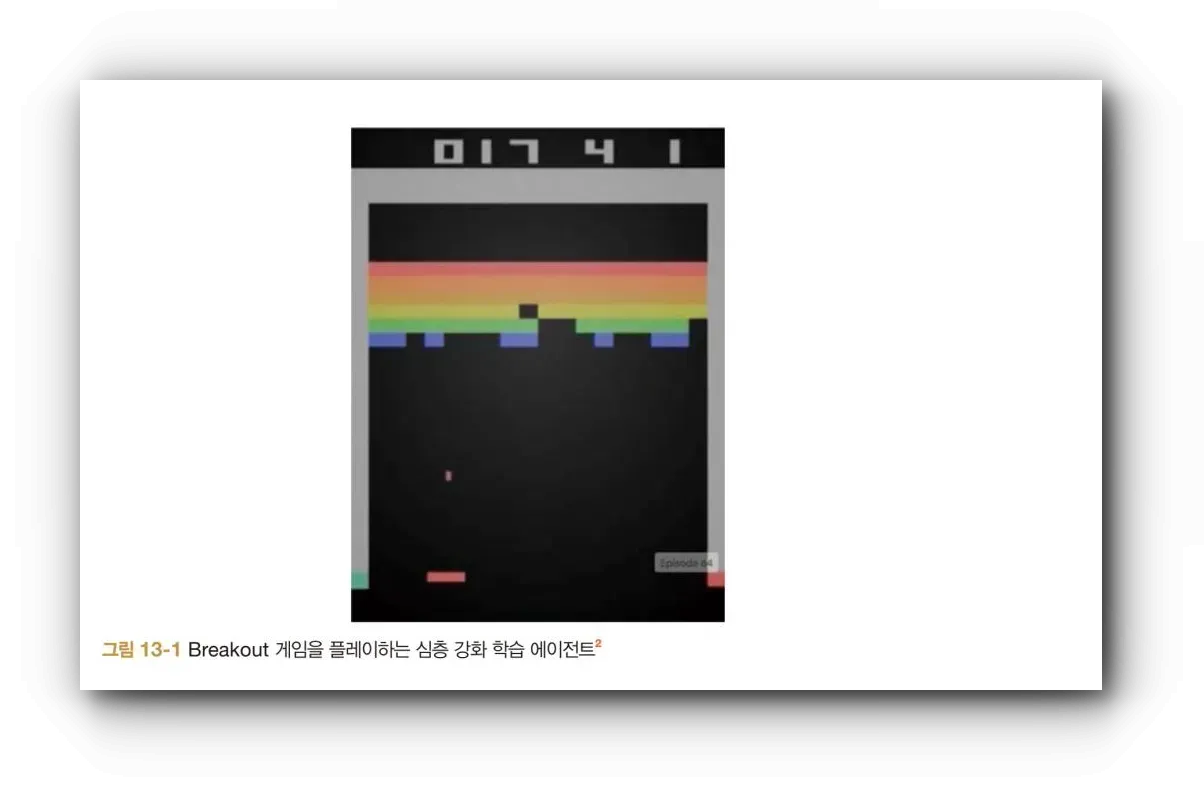

Covers reinforcement learning, which has received much attention since AlphaGo's success.

Along with examples of developing reinforcement learning agents using OpenAI Gym, it explains why DeepMind used DQN and introduces improvements such as DRQN, A3C, and UNREAL, which address the problem of needing to retrain for each new game.
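DQN builds on the classic Q-learning update, replacing the lookup table with a neural network. The tabular version below is a simplified sketch of my own to show the core idea. The state and action names are hypothetical, and it does not use OpenAI Gym.

```python
def q_learning_update(q, state, action, reward, next_state, alpha=0.1, gamma=0.99):
    """Move Q(state, action) toward reward + gamma * max_a Q(next_state, a)."""
    best_next = max(q[next_state].values())
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]

# Hypothetical two-state table of action values
q_table = {
    "s0": {"left": 0.0, "right": 0.0},
    "s1": {"left": 1.0, "right": 2.0},
}
updated = q_learning_update(q_table, "s0", "right", reward=1.0, next_state="s1")
```

In DQN the same target is used as a regression label for the network's prediction, which is what lets the approach scale to raw game frames.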

Review

Reading up to Chapter 8 gave me headaches from the sheer volume of material, so I skipped Chapter 9 (Sequence Analysis), Chapter 11 (Interpretability Methodologies), and Chapter 12 (Memory-Augmented Neural Networks) and looked at the chapters that interested me first. I plan to read the rest later :)

I only recently started using PyTorch while working on AI-related feature projects and wasn't familiar with it, so Chapter 5 was very helpful. The explanation of PyTorch tensor properties and operations is especially well organized.

The book being in color is great.

Related books and courses

Hanbit Media recommends the following books to read together:

- Deep Learning from Scratch 4

- Hands-On Machine Learning (3rd Edition)

- Deep Learning with Keras

Personally, I recommend Professor Andrew Ng's Machine Learning Specialization on Coursera. Many other AI and AGI related courses are also available on DeepLearning.AI, which Professor Ng founded.

"This review was written after receiving the book for Hanbit Media's <I am a Reviewer> activity."
