[Book Review] LLM Service Design and Optimization - A Guide to Building and Operating AI Services with Lower Costs and Maximum Performance

- A review of LLM service design/optimization with a cost-performance-operations lens.

- Highlights system-level decisions that matter more than model choice alone in production.

"This review was written after receiving the book for Hanbit Media's <I am a Reviewer> activity."

The book I'm reviewing this time is 'LLM Service Design and Optimization'.

Since LLMs emerged, countless AI features have sprung up across all kinds of services. For the past two years I have also been working on AI at my company, including image generation with diffusion models and LLM-based content generation. Many points in this book resonated with my own experience, and I learned a lot from it. I think AI service development is now in a transitional phase. Many people may feel lost because there are still no established standards for designing AI service systems and logic, and this book should help set some guidelines before you dive into the latest trends.

Author Introduction

Dr. Shreyas Subramanian is a Principal Data Scientist at Amazon Web Services who has made significant contributions to the fields of AI and machine learning. His books on AWS machine learning certification and on machine learning-powered high-performance computing have ranked in the top 10 of Amazon's AI bestsellers, a testament to his expertise. He has also authored several influential research papers that have enriched academic discourse in AI, and the patents he has developed in recent years further demonstrate his record of innovation. In addition, he shares knowledge on topics such as deploying machine learning models to Amazon SageMaker, large-scale model training, and building serverless inference functions through the AWS Machine Learning Blog, showing his ability to make complex concepts accessible to a broad audience.

Book Introduction

Learning How to Build Cost-Effective Apps Using Large Language Models

In "Large Language Model-Based Solutions: How to Deliver Value with Cost-Effective Generative AI Applications," Shreyas Subramanian, Principal Data Scientist at Amazon Web Services, provides a practical guide for developers and data scientists who want to build and deploy cost-effective Large Language Model (LLM) based solutions. The book covers key topics including model selection, data preprocessing and postprocessing, prompt engineering, and instruction fine-tuning.

Aimed at developers and data scientists interested in deploying foundation models and at business leaders looking to scale their use of generative AI, the book will also be useful for project leaders and managers, technical support staff, and anyone else with an interest or stake in this topic.

Book Review

Table of Contents

Chapter 1: LLM Foundations

Covers the history and core concepts of LLMs. What sets this book apart is that, starting in Chapter 1, it goes into more detail with cost calculation snapshots and benchmark results. Beyond LLM concepts, it separately explains the infrastructure layer, model layer, and application layer needed to build an AI service, and it discusses the cost optimizations that must be considered when deploying a real service.
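To give a sense of what a cost calculation snapshot involves, here is a minimal sketch of per-request API cost arithmetic. It assumes the common pricing model in which input and output tokens are billed separately per million tokens. The prices and traffic numbers are hypothetical placeholders, not figures from the book or from any provider.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pricing:
    """Hypothetical per-million-token prices in USD (placeholders, not real rates)."""
    input_per_million: float
    output_per_million: float


def request_cost(pricing: Pricing, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one request, billing input and output tokens separately."""
    return (
        input_tokens / 1_000_000 * pricing.input_per_million
        + output_tokens / 1_000_000 * pricing.output_per_million
    )


def monthly_cost(pricing: Pricing, requests_per_day: int,
                 input_tokens: int, output_tokens: int, days: int = 30) -> float:
    """Rough monthly estimate assuming every request has the same token counts."""
    return request_cost(pricing, input_tokens, output_tokens) * requests_per_day * days


if __name__ == "__main__":
    hypothetical = Pricing(input_per_million=3.0, output_per_million=15.0)
    # 1,000 input + 500 output tokens: 0.003 + 0.0075 = 0.0105 USD per request
    print(round(request_cost(hypothetical, 1_000, 500), 6))
    print(round(monthly_cost(hypothetical, 10_000, 1_000, 500), 2))
```

Even this crude model shows why output length matters: with the placeholder prices, 500 output tokens cost more than twice as much as 1,000 input tokens.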

Chapter 2: Tuning Techniques for Cost Optimization

Chapter 2 dives straight into tuning techniques. It covers parameter-efficient methods such as P-tuning and LoRA, using tables to show how they can drastically reduce the otherwise enormous cost of full fine-tuning.
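The core arithmetic behind LoRA's savings can be sketched by counting trainable parameters. For a frozen weight matrix W of shape d × k, LoRA trains two low-rank factors B (d × r) and A (r × k), so the trainable count drops from d·k to r·(d + k). The 4096 × 4096 shape and rank 8 below are illustrative assumptions, not numbers taken from the book's tables.

```python
def full_params(d: int, k: int) -> int:
    """Trainable parameters when fully fine-tuning a d x k weight matrix."""
    return d * k


def lora_params(d: int, k: int, r: int) -> int:
    """Trainable parameters for the LoRA factors B (d x r) and A (r x k)."""
    return r * (d + k)


if __name__ == "__main__":
    d, k, r = 4096, 4096, 8  # illustrative projection shape and rank
    full = full_params(d, k)
    lora = lora_params(d, k, r)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.4%}")
```

Gradient and optimizer-state memory shrink along with the trainable count, but the frozen base weights still have to be loaded, so the savings apply mainly to the training side of the bill.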

Chapter 3: Inference Techniques for Cost Optimization

Presents inference-time optimization methods such as prompt engineering, caching, and batching. It introduces what might now be called traditional(?) techniques like zero-shot, few-shot, and chain-of-thought (CoT) prompting, caching with vector stores, batching prompts, and chaining with LangChain, and shows how to combine them to build a complete service.
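The idea behind a vector-store cache can be sketched as follows: embed each incoming prompt, and if a previously answered prompt is similar enough, return the stored answer instead of calling the model. To keep the example self-contained, the embedding is a toy bag-of-words count vector, the linear scan stands in for a vector store, and the 0.9 threshold is an arbitrary assumption. This is not the book's or LangChain's implementation.

```python
import math
import re
from collections import Counter
from typing import Callable, Optional


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class SemanticCache:
    """Linear-scan semantic cache; a vector store would replace the list lookup."""

    def __init__(self, threshold: float = 0.9) -> None:
        self.threshold = threshold
        self.entries: list[tuple[Counter, str]] = []

    def get(self, prompt: str) -> Optional[str]:
        """Return the answer of the most similar stored prompt if it clears the threshold."""
        query = embed(prompt)
        best_score, best_answer = 0.0, None
        for vec, answer in self.entries:
            score = cosine(query, vec)
            if score > best_score:
                best_score, best_answer = score, answer
        return best_answer if best_score >= self.threshold else None

    def put(self, prompt: str, answer: str) -> None:
        self.entries.append((embed(prompt), answer))


def answer_with_cache(cache: SemanticCache, prompt: str,
                      call_model: Callable[[str], str]) -> str:
    """Serve from cache when possible; otherwise call the model and store the result."""
    cached = cache.get(prompt)
    if cached is not None:
        return cached
    answer = call_model(prompt)
    cache.put(prompt, answer)
    return answer
```

With this sketch, "What is LoRA?" followed by "what is lora" triggers only one model call, while an unrelated prompt misses the cache. The threshold trades cost savings against the risk of returning an answer to a subtly different question.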

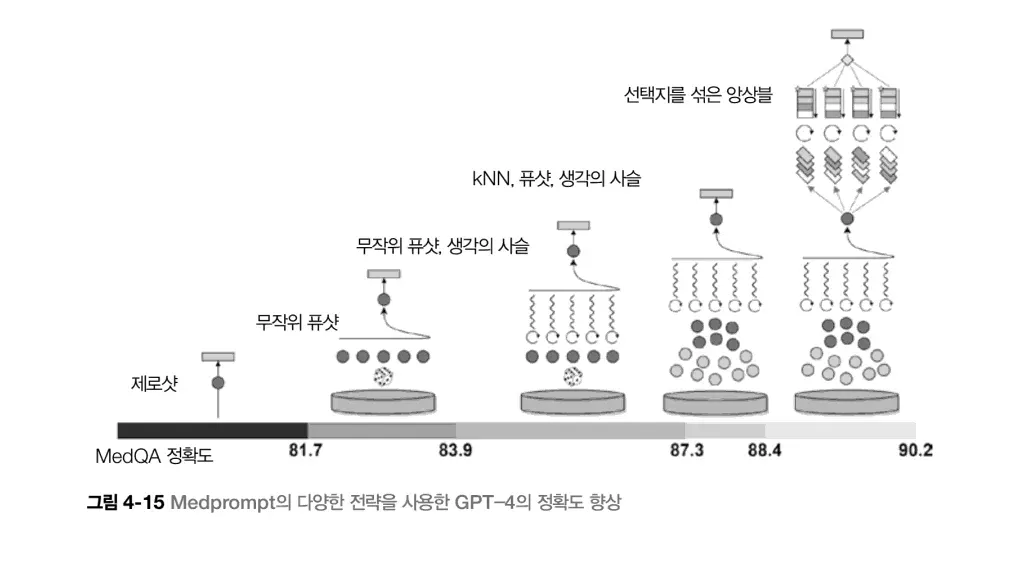

Chapter 4: Model Selection and Alternatives

Offers guidance on model selection. It covers Small Language Models (SLMs) and Large Language Models (LLMs), as well as domain-specific models built for particular fields. It explains how to specialize a model for a domain by training a custom tokenizer, and conversely examines whether general-purpose models can match domain-specific models through prompt engineering alone, without fine-tuning.

Note that it discusses broad categories of models rather than specific ones such as claude-3.7-sonnet, gpt-4, gpt-3.5, or o3.

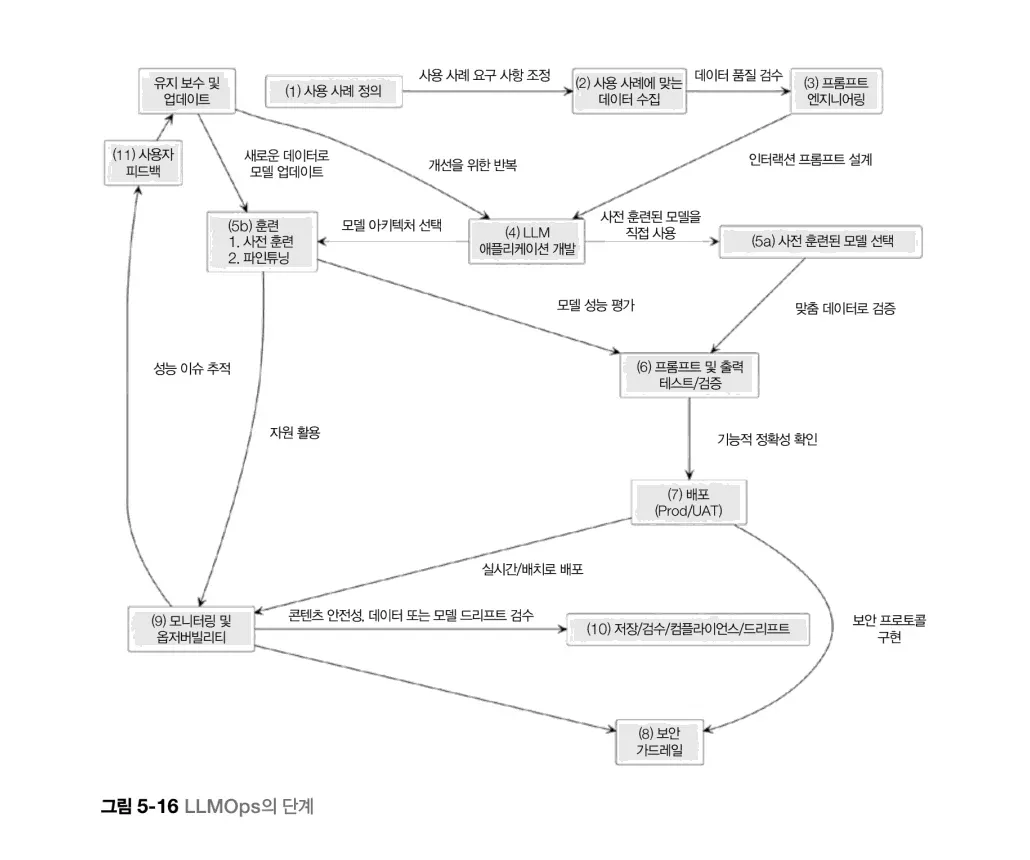

Chapter 5: Infrastructure and Deployment Tuning Strategies

Covers memory, caching, cost, and optimization strategies, and introduces LLM monitoring under the name LLMOps. The overall steps are summarized in the image below. It offers practical tips worth knowing when serving generative AI applications.
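As a rough illustration of the memory side of deployment planning, the memory needed just to hold a model's weights is its parameter count times the bytes per parameter. This sketch deliberately ignores the KV cache, activations, and framework overhead, all of which add to the real footprint, and the 7B parameter count is only an example.

```python
# Bytes needed to store one parameter at each precision.
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16": 2.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Decimal gigabytes (1e9 bytes) needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in ("fp16", "int8", "int4"):
        print(dtype, weight_memory_gb(7e9, dtype), "GB")
```

Because memory scales linearly with bytes per parameter, lower-precision weights let the same model fit on smaller or fewer GPUs, which feeds directly into serving cost.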

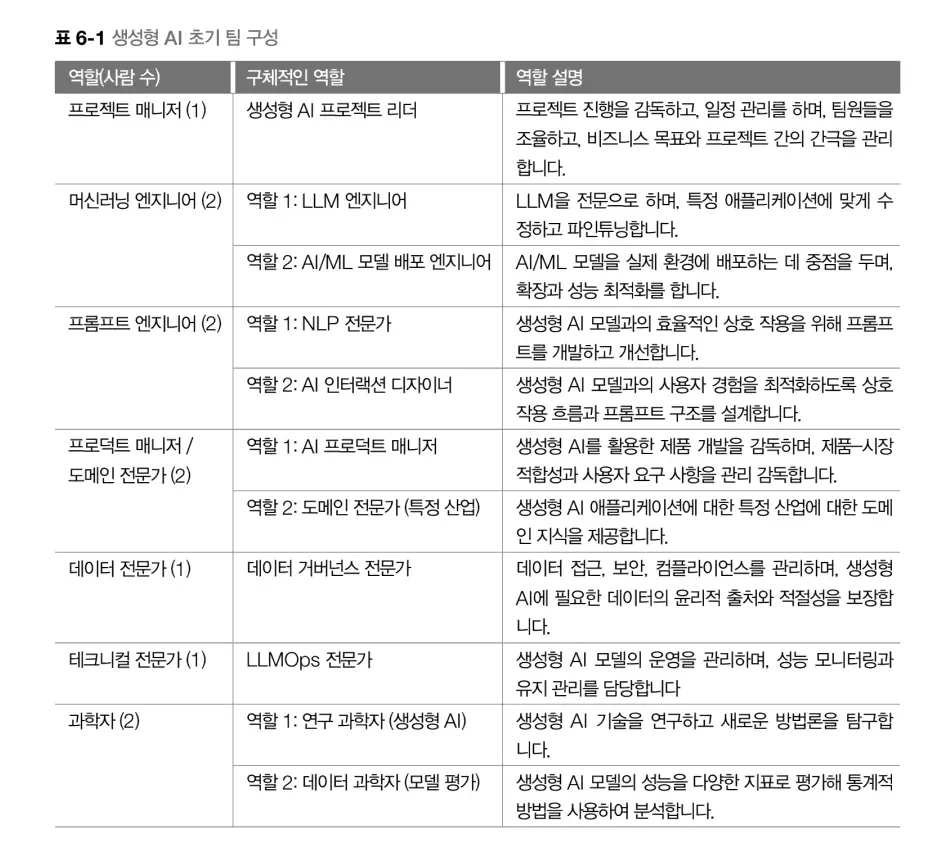

Chapter 6: Keys to Successful Generative AI Adoption

Opens with a discussion of balancing performance and cost, and covers details worth reviewing in advance when building a generative AI team. It closes with future trends: Mixture of Experts (MoE), multimodal models, and agents. These felt less like future trends than the hottest topics right now, which struck me as odd at first, but since the original book was first published in May last year, it makes sense that they were classified as future trends at the time.

While other LLM books devote many pages to the history from word2vec to LLMs, this book moves through that material quickly and focuses on cost, optimization, performance improvement, and tuning, the areas you will actually wrestle with on the job.

Target Audience

The book lists its target audience at the beginning. As that list suggests, this is not a beginner's book. It does cover prompt engineering, but it focuses more on building services, cost, and optimization than on the finer points of prompt engineering.

For this reason, I recommend reading it once you have some background knowledge, or when you are building an AI team.

Conclusion

As mentioned in the Chapter 6 section, the book came out in May last year, so some of its information is no longer current. For example, https://artificialanalysis.ai/ is a good service for comparing models, but it launched in Q3 last year, after the book was published, so the book does not mention it.

Since something new appears in AI almost every other day, rather than relying on this book for the latest information, it is better to use it to establish guidelines for building teams and configuring infrastructure, and then follow up on the latest trends separately.
