[Book Review] Quick Start Guide to Practical LLM

- A review of a practical LLM quick-start book, with a focus on the trade-off between speed and depth.

- Helps you decide whether it is the right book for building a fast but useful mental model of LLMs.

Quick Start Guide to Large Language Models

This book was published by O'Reilly in October 2023. Given how quickly LLMs are evolving, I worried it might already be outdated, but it focuses on the fundamentals of LLMs.

Target Audience

This book is suitable for anyone interested in LLMs or starting to build LLM-based services. The author clearly makes an effort to explain concepts simply and accessibly.

In my review of Fundamentals of Deep Learning, 2nd Edition, I wrote that "if you're interested in AI and want to know how to use Stable Diffusion or LLMs like those from OpenAI, or what is possible with them, it's better to study prompt engineering than to learn machine learning or deep learning." This is exactly the kind of book I had in mind.

Book Introduction

This book traces how language models evolved from neural language models to LLMs and explains how they work. Starting with the Transformer architecture introduced by a Google team in 2017, it covers this history in detail, even though that history is relatively short.

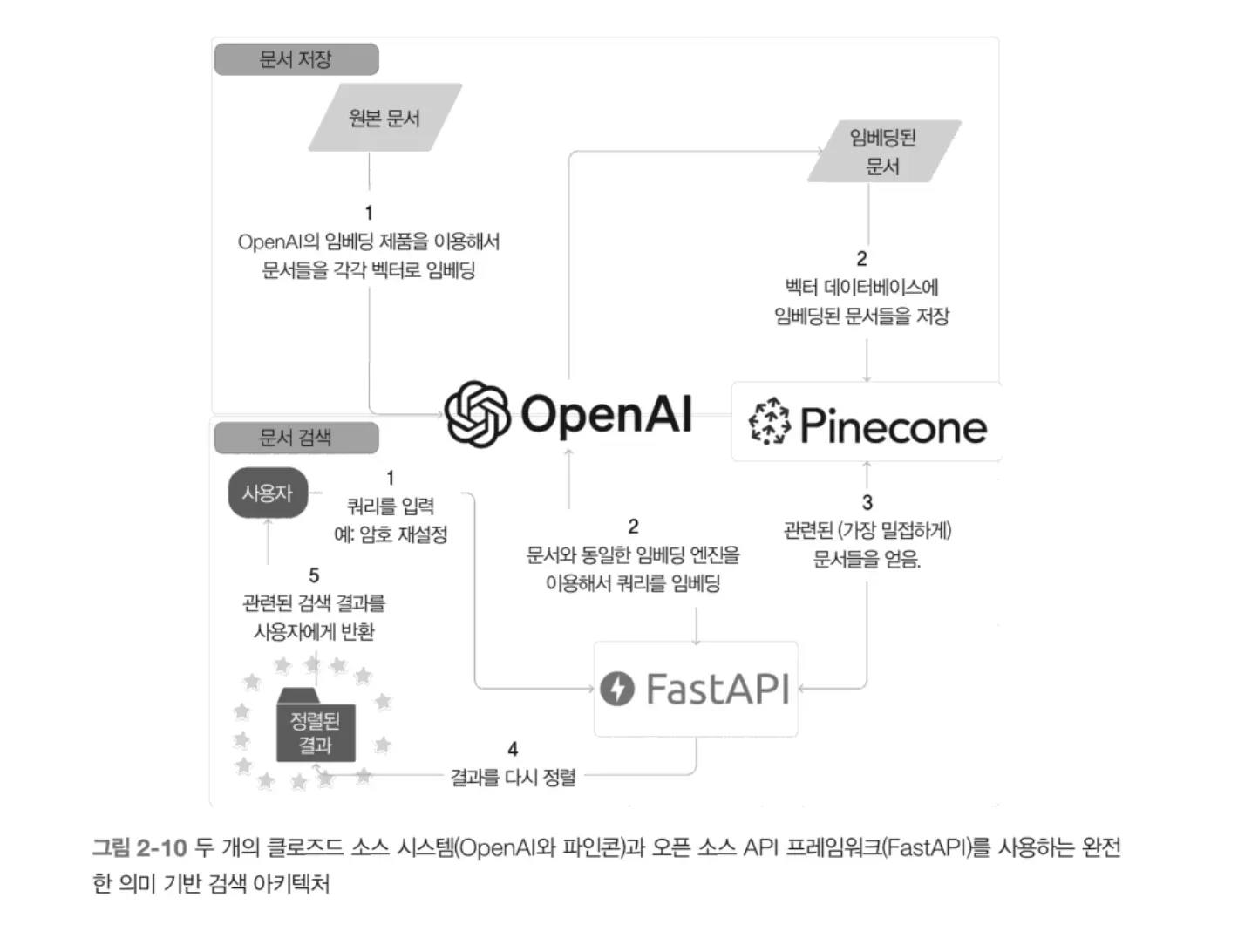

It covers semantic search and basic prompt engineering, starting from the fundamentals and building up from there. For example, the Semantic Search chapter also covers text embeddings, vector databases, and cosine similarity. The book also introduces document chunking for RAG (retrieval-augmented generation), prompt engineering strategies, and assigning the model a persona.
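To make the chunking and cosine-similarity ideas concrete, here is a minimal sketch of my own, not code from the book. It assumes fixed-size word windows with overlap as the chunking strategy, and it uses simple word counts as a stand-in for a real embedding model (real semantic search would use learned dense embeddings and usually a vector database). The function names `chunk_words`, `embed`, and `semantic_search` are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_words(text: str, chunk_size: int = 8, overlap: int = 2) -> list[str]:
    """Split text into windows of `chunk_size` words that share `overlap` words."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    words = text.split()
    step = chunk_size - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):  # last window reached the end
            break
    return chunks


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): lowercase word counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine_similarity(a: Counter, b: Counter) -> float:
    """cos(theta) = (a . b) / (|a| * |b|), treating each Counter as a sparse vector."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def semantic_search(query: str, chunks: list[str], top_k: int = 2) -> list[tuple[float, str]]:
    """Rank chunks by cosine similarity to the query and return the best `top_k`."""
    q = embed(query)
    scored = [(cosine_similarity(q, embed(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


if __name__ == "__main__":
    document = (
        "Transformers process tokens in parallel using attention. "
        "Vector databases store embeddings for fast similarity search. "
        "Cosine similarity compares the angle between two vectors."
    )
    chunks = chunk_words(document, chunk_size=8, overlap=2)
    for score, chunk in semantic_search("how does cosine similarity compare vectors", chunks):
        print(f"{score:.3f}  {chunk}")
```

The overlap keeps a sentence that straddles a chunk boundary partly visible in both chunks, which is the usual motivation for overlapping windows in RAG pipelines.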

Part 1 covers the basics of building AI services.

Part 2 focuses on advanced techniques. Part 1 is mostly enough for basic services such as QA systems and chatbots, but for more complex services you will need Part 2's coverage of fine-tuning. It also explains prompt chaining and its advantages: it helps prevent prompt injection and prompt stuffing, and it avoids the extra processing time and degraded performance that occur when a model has to handle an overly complex prompt.
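As a rough illustration of prompt chaining (my own sketch, not the book's code), the example below splits one task into two short prompts: the first extracts relevant facts from delimited input text, and the second answers using only those facts. The model call is abstracted as a plain function `llm(prompt) -> str`; `summarize_then_answer` and `make_fake_llm` are hypothetical names, and the fake model exists only so the sketch runs without any API.

```python
from typing import Callable

# Any function that takes a prompt and returns the model's reply (assumption).
LLM = Callable[[str], str]


def summarize_then_answer(document: str, question: str, llm: LLM) -> str:
    """Two-step prompt chain: extract relevant facts, then answer from them."""
    # Step 1: a narrow prompt whose only job is extraction.
    # The raw document is fenced with markers so it is framed as data, not instructions.
    extract_prompt = (
        "Extract the facts relevant to the question from the text between the markers.\n"
        f"Question: {question}\n"
        f"<<<TEXT\n{document}\nTEXT>>>"
    )
    facts = llm(extract_prompt)

    # Step 2: a second short prompt that sees only the extracted facts,
    # not the full raw document.
    answer_prompt = (
        "Answer the question using only these facts.\n"
        f"Facts: {facts}\n"
        f"Question: {question}"
    )
    return llm(answer_prompt)


def make_fake_llm(log: list[str]) -> LLM:
    """Hypothetical stand-in model that records prompts and returns fixed replies."""
    def fake(prompt: str) -> str:
        log.append(prompt)
        return "FACTS" if prompt.startswith("Extract") else "ANSWER"
    return fake


if __name__ == "__main__":
    prompts: list[str] = []
    print(summarize_then_answer("Some long report...", "What changed?", make_fake_llm(prompts)))
    for p in prompts:
        print("---\n" + p)
```

Each prompt stays short and single-purpose, and the final prompt never contains the raw input. That limits, but does not eliminate, the room for injected instructions to reach the answering step.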

Part 3 covers advanced LLM usage. It introduces advanced fine-tuning techniques for getting results comparable to GPT-4 from open-source or smaller models, and it stresses the importance of data preparation and feature engineering. As a fine-tuning example, it shows how to approach GPT-3-like performance with a 100-million-parameter GPT-2 model by defining a reward model and applying reinforcement learning.

Finally, the book's appendix is very useful. It includes an LLM FAQ, a glossary of LLM terms, and considerations for developing LLM applications. I personally recommend reading it before the rest of the book.

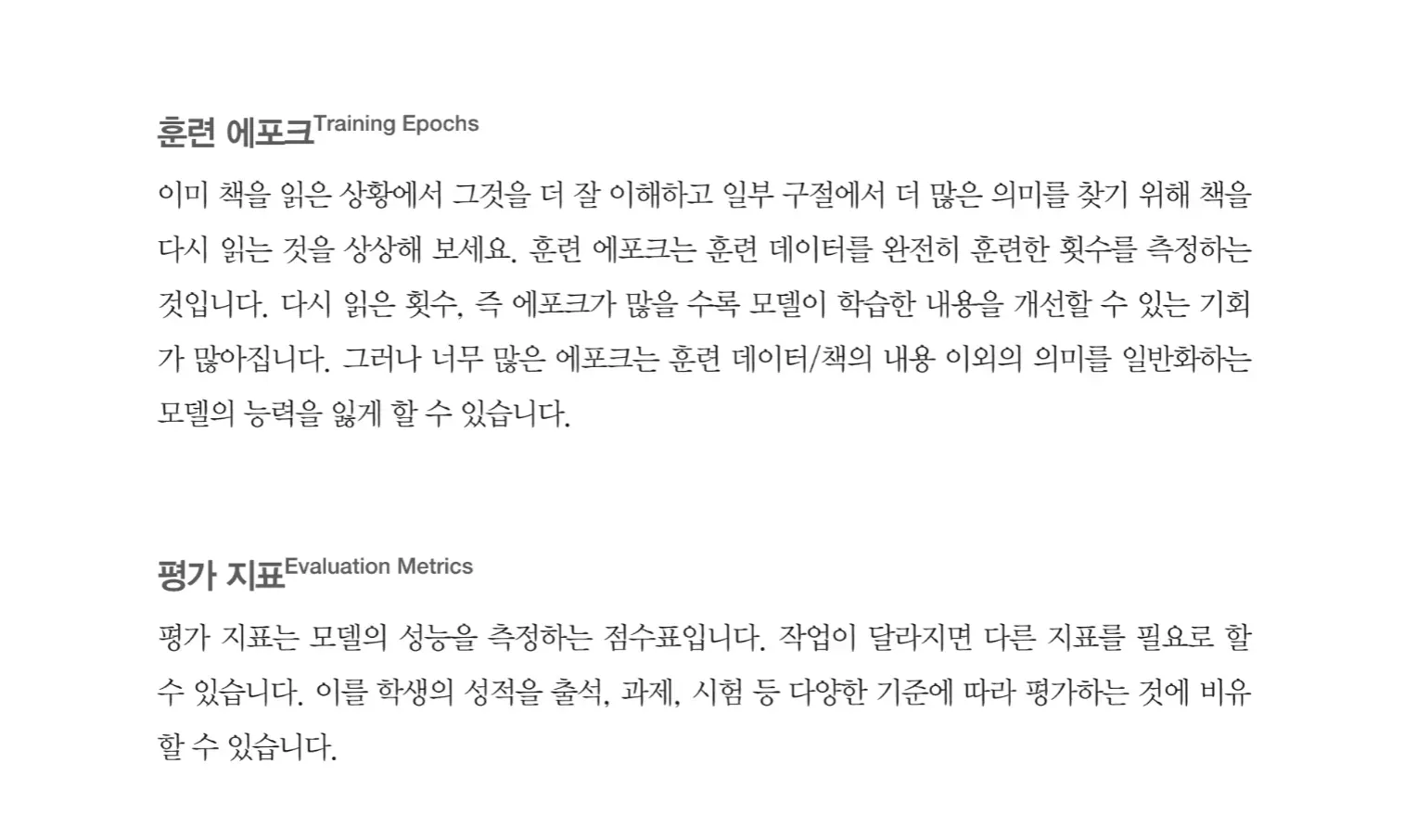

The glossary in particular is a treasure trove of metaphors. As shown above, it explains potentially difficult terms with apt metaphors that make them easy to grasp, which impressed me as I read. If you are just starting out with LLMs, the unfamiliar AI terminology can be overwhelming, which is another reason to start with the appendix.

Review

I recommend taking LLM-related courses at https://deeplearning.ai before reading this book.

This book does not cover third-party tools such as LangChain. Those tools can be useful, but the book focuses on helping readers understand LLM fundamentals first and leaves the choice of third-party tools to them for later.

"This review was written after receiving the book for Hanbit Media's <I am a Reviewer> activity."